Smart glasses offer a more scalable source of training data than static cameras, letting robots learn manipulation tasks directly from human-object interactions captured in everyday environments.

The General-Purpose Robotics and AI Lab at New York University has introduced EgoZero, a framework that uses smart glasses to generate training data for robots. The system leverages egocentric video and spatial tracking to train general-purpose manipulation models without the need for robot demonstration data. The “ego” part of EgoZero refers to the “egocentric” nature of the data, meaning that it is collected from the perspective of the person performing a task.

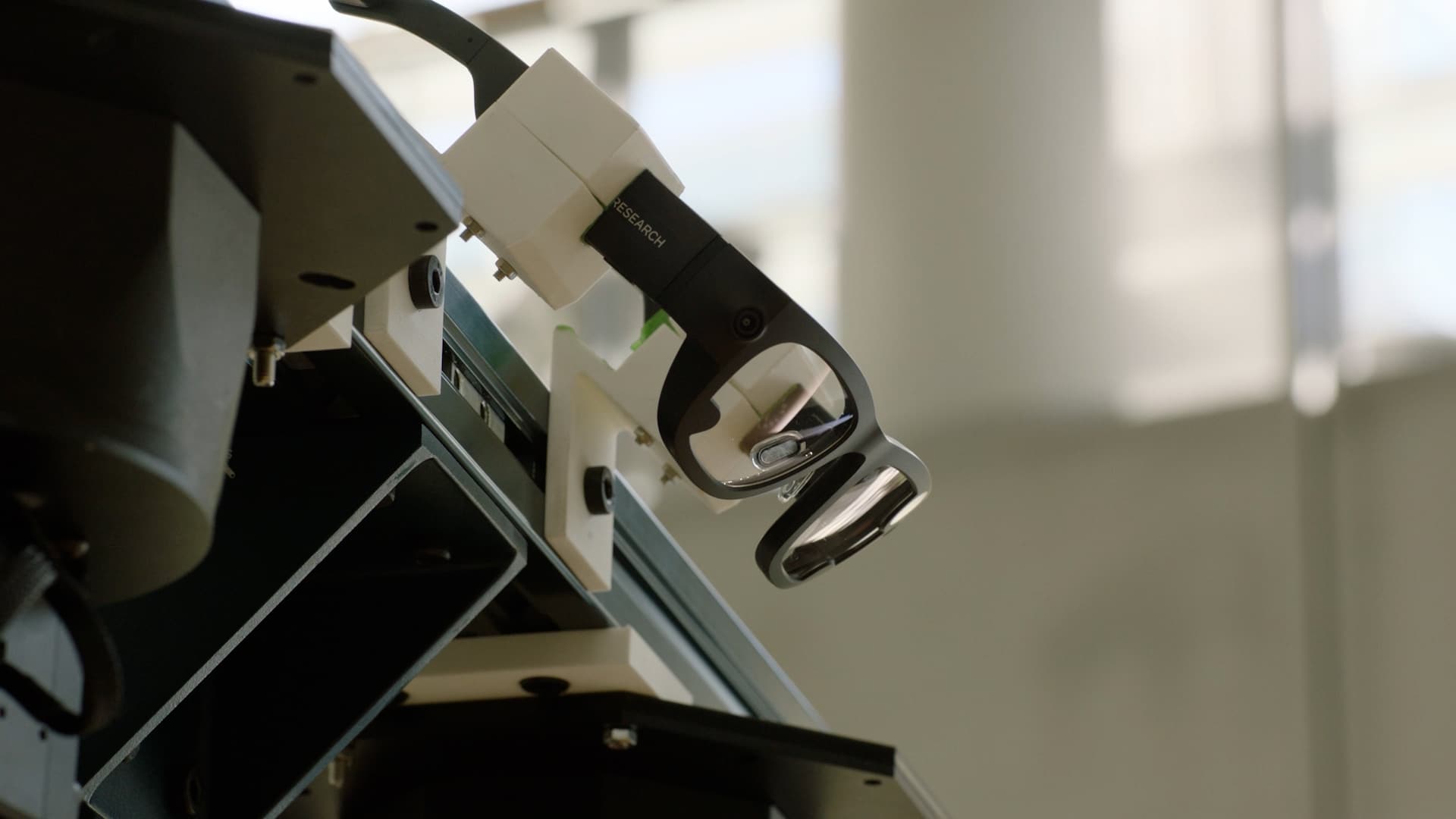

EgoZero operates on egocentric recordings from Meta’s Project Aria glasses. These devices capture continuous first-person video and spatial information as humans perform everyday tasks. The data is processed through a pipeline that localises object points in 3D by triangulating them across frames using the tracked camera trajectory. Hand pose estimation models provide keypoints that are converted into “action points,” representing contact and motion vectors.
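To illustrate the triangulation step, here is a minimal sketch of a standard least-squares method, assuming each frame supplies a known camera centre (from the glasses' camera trajectory) and a direction towards the observed object point. The function names `triangulate`, `normalise` and `det3` are hypothetical, and this generic textbook technique is not necessarily the exact method EgoZero uses.

```python
"""Least-squares triangulation of a 3D point from several camera rays.

Assumption: each frame gives a known camera centre and a direction
towards the object point. Generic method, not EgoZero's published code.
"""
from math import sqrt

Vec3 = tuple[float, float, float]


def normalise(v: Vec3) -> Vec3:
    n = sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate(centres: list[Vec3], directions: list[Vec3]) -> Vec3:
    """Return the point minimising the summed squared distance to all rays.

    For a ray with centre c and unit direction d, the projector
    P = I - d d^T keeps only the component perpendicular to the ray,
    so the optimum x solves (sum of P) x = sum of (P c).
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(centres, directions):
        d = normalise(d)
        for i in range(3):
            for j in range(3):
                p_ij = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p_ij
                b[i] += p_ij * c[j]
    det = det3(a)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel; more viewpoint change needed")
    # Solve the 3x3 system with Cramer's rule: swap column k of A for b.
    result = []
    for k in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        result.append(det3(m) / det)
    return (result[0], result[1], result[2])
```

For example, two viewpoints at `(0, 0, 0)` and `(1, 0, 0)` that both look at a point two metres ahead recover it: `triangulate([(0, 0, 0), (1, 0, 0)], [(0.5, 0, 2), (-0.5, 0, 2)])` gives approximately `(0.5, 0.0, 2.0)`. As the wearer moves, each new frame adds another ray, which is why a continuous camera trajectory makes the estimate more robust.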

Rather than training on raw images, the system reduces each recording to point-based trajectories in 3D space. This point abstraction sidesteps the visual mismatch between human hands and robotic end-effectors: robot arms replicate the motion of these points relative to the objects, rather than trying to reproduce the appearance of human motion.
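The idea of replaying motion relative to objects can be sketched in a few lines. This is a hypothetical illustration, not EgoZero's published code, and it rests on assumptions labelled in the comments: an action point is taken as the midpoint of the thumb and index fingertips, the gripper is treated as closed when those fingertips are closer than a made-up 3 cm threshold (`GRASP_DIST_M`), and each object is represented by the centroid of its 3D points with translation only.

```python
"""Hypothetical sketch: fingertip keypoints -> object-relative action points.

Assumptions (illustrative only):
- an action point is the midpoint of the thumb and index fingertips;
- the gripper is "closed" when fingertips are closer than GRASP_DIST_M;
- an object is tracked by the centroid of its 3D points (translation only).
"""
from dataclasses import dataclass
from math import dist

Vec3 = tuple[float, float, float]
GRASP_DIST_M = 0.03  # hypothetical closing threshold, in metres


@dataclass
class ActionPoint:
    offset: Vec3   # position relative to the object centroid
    closed: bool   # gripper state inferred from fingertip spacing


def centroid(points: list[Vec3]) -> Vec3:
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def to_action_points(thumb: list[Vec3], index: list[Vec3],
                     object_points: list[Vec3]) -> list[ActionPoint]:
    """Convert per-frame fingertip positions into object-relative actions."""
    origin = centroid(object_points)
    actions = []
    for t, i in zip(thumb, index):
        mid = tuple((a + b) / 2 for a, b in zip(t, i))
        offset = tuple(m - o for m, o in zip(mid, origin))
        actions.append(ActionPoint(offset, dist(t, i) < GRASP_DIST_M))
    return actions


def retarget(actions: list[ActionPoint],
             new_object_points: list[Vec3]) -> list[tuple[Vec3, bool]]:
    """Replay the same relative motion around the object's new location."""
    origin = centroid(new_object_points)
    return [(tuple(o + d for o, d in zip(origin, a.offset)), a.closed)
            for a in actions]
```

Because only points and a gripper state are stored, nothing in this representation depends on what a human hand looks like, so the same targets can drive any end-effector that can reach a point and open or close. A fuller version would track each object's rotation as well as its position.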

In proof-of-concept experiments, 20 minutes of human demonstrations were recorded for each of seven tasks, including pick-and-place actions. Robots trained only on this egocentric data achieved a 70 per cent success rate when deployed on physical tasks.

The approach is portable and scalable. Because wearers naturally turn their heads towards the parts of a scene that matter, the glasses capture task-relevant detail without deliberate camera placement, yielding more useful data than static external cameras. It also removes the need for robot-specific data collection, which is time-consuming and tied to particular hardware.

Alongside EgoZero, the researchers developed a 3D-printed handheld gripper fitted with a smartphone camera to replicate robotic grasping. This parallel method applies the same point-space tracking principle and offers a low-cost route to collecting data at scale.

EgoZero represents a step towards large-scale datasets of human-object interaction, analogous to the internet-scale text corpora behind language models, with the aim of accelerating general-purpose robotics.